While AI has reshaped many industries, its deployment is not without challenges. We asked one of our AI experts, Tony Shepherd, to draw on his experience to identify common pitfalls in AI development, suggest practical solutions to overcome them, and discuss ethical practices such as the principles of Responsible AI.

As a Senior Consultant in the Data & Analytics team at twoday, Shepherd has not only developed and assessed different AI solutions but also critically evaluated their business benefits. He holds a Ph.D. in Computer Science from University College London, and for years his work has been defined by a profound engagement with data and AI.

Artificial intelligence can now be deployed quickly and easily, but the hype curve may lead to disappointments and failures

The accessibility and quality of AI, particularly generative language models, have made experimenting with AI quick and straightforward. Shepherd sees both opportunities and threats in the easy deployment of generative AI:

"On one hand, this enables rapid solutions to queries and enhances productivity across business operations and communications," Shepherd remarks.

Yet he cautions against succumbing to the hype curve, a trajectory in which inflated expectations lead to disappointment and setbacks. He points to the long history of AI development, which dates back to the 1950s, to highlight a recurring pattern:

"History teaches us that AI's path is punctuated with consistent hurdles," Shepherd says, "and eventually, we encounter the same challenges: a fundamental misunderstanding of the technology, leading to misguided decisions rooted in false assumptions."

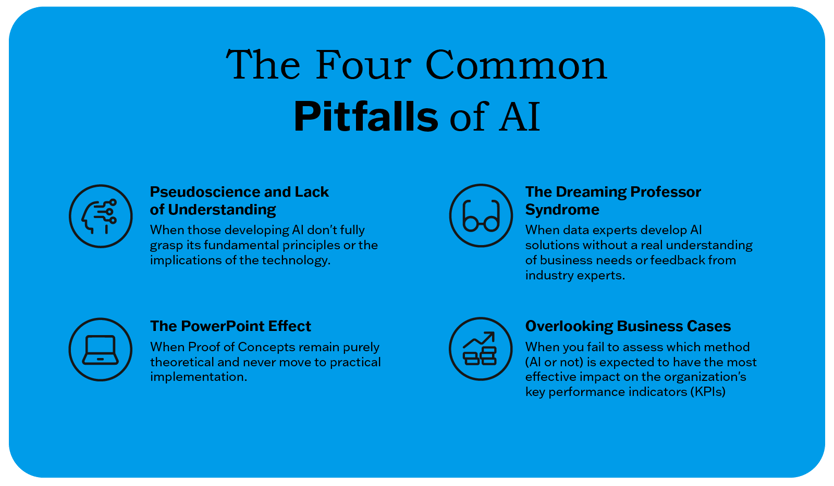

Avoiding the Four Common Pitfalls of AI

Shepherd highlights four common pitfalls in AI that many companies learn about the hard way: Pseudoscience and Lack of Understanding, the Dreaming Professor Syndrome, the PowerPoint Effect, and Overlooking Business Cases.

🔶 Pseudoscience and Lack of Understanding

When those developing AI don't fully grasp its fundamental principles or the implications of the technology, the result can be misleading or invalid solutions. Relying solely on business professionals who lack the necessary technical expertise is not enough: data experts should always be involved in AI development from the very first experiments.

🔶 The Dreaming Professor Syndrome

Data experts often develop AI solutions without a real understanding of business needs or feedback from industry experts. This disconnect can result in solutions that are technically proficient but practically misaligned, missing the mark on the business's true objectives. To bridge this gap, it's crucial for industry professionals to engage in AI development from the beginning.

🔶 The PowerPoint Effect

Proof of Concept (PoC) projects that remain purely theoretical and never move to practical implementation waste both time and resources. When experiments stall in the boardroom, stakeholders grow dissatisfied and trust in AI's capabilities erodes. To counter this, every PoC project should include clear, actionable steps for turning plans into concrete action, including assigned responsibilities for integrating and maintaining the AI module within the wider IT and process landscape.

🔶 Overlooking Business Cases

Shepherd emphasizes the importance of understanding how AI affects an organization's key performance indicators (KPIs) and of evaluating which approach, AI-based or not, is likely to improve them most effectively. Clear business goals and the ability to measure AI's impact are the only way for companies to ensure that their investment in AI translates into tangible business benefits.

However, even when AI meets its targets and significantly boosts efficiency, ethical considerations should not be overlooked. As AI increasingly affects our daily lives, it's essential to identify and manage the associated risks. Responsible use of AI requires careful assessment of the technology's impacts on people and the environment. This includes ensuring transparency, promoting non-discrimination, and protecting privacy.

Responsible AI in Practice and the Crucial Role of Subject Matter Experts

As technology advances and AI's influence on our daily lives grows, the principles of responsible use become ever more important.

According to Shepherd, subject matter experts play a central role in developing and using AI responsibly. He welcomes the growing interest in AI among professionals, as their knowledge and perspectives are indispensable for designing and implementing AI solutions that are both responsible and efficient.

What Does Responsible AI Mean? Seven Key Requirements

The Ethics Guidelines for Trustworthy AI, prepared by the European Commission's High-Level Expert Group on Artificial Intelligence, set out seven key requirements to help organizations recognize and mitigate the risks of using AI:

- Human Agency and Oversight;

- Technical Robustness and Safety;

- Privacy and Data Governance;

- Transparency;

- Diversity, Non-discrimination and Fairness;

- Environmental and Societal Well-being; and

- Accountability.

The Seven Key Areas of Responsible AI in Practice

Tony Shepherd has not only developed and evaluated numerous AI applications but has also led AI projects, for instance, in the customer service sector.

He shares insights from a recent initiative in which AI enhanced the operations of a large customer service centre, particularly in handling customer questions. In a centre where hundreds of professionals handle over two million calls and over 500,000 chat messages annually, using AI responsibly is paramount.

When dealing with large numbers of customers and sensitive inquiries, there is no room for a "black box" AI whose answers seem accurate but go unchecked and may be largely incorrect.


1. Human Agency and Oversight 🧐

Shepherd places particular emphasis on human agency in guiding AI. In the customer service project, the AI solution was analysed and trained to distinguish accurate responses from inaccurate ones, ensuring efficient and appropriate operation. Humans are central to this process: staff actively direct and supervise the AI's performance.
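To make the human-in-the-loop idea more concrete, here is a minimal Python sketch of one possible review step. It assumes the AI agent attaches a confidence score to each draft answer; the `DraftAnswer` and `ReviewQueue` names and the 0.8 threshold are hypothetical and not taken from the project described. Low-confidence drafts are held for a staff member, whose verdict is stored as a labelled example that could later feed retraining.

```python
from dataclasses import dataclass, field
from typing import List, Tuple


@dataclass
class DraftAnswer:
    question: str
    answer: str
    confidence: float  # assumed score in [0, 1] supplied by the AI agent


@dataclass
class ReviewQueue:
    threshold: float = 0.8  # hypothetical cut-off; would be tuned per process
    pending: List[DraftAnswer] = field(default_factory=list)
    verdicts: List[Tuple[DraftAnswer, bool]] = field(default_factory=list)

    def route(self, draft: DraftAnswer) -> str:
        """Hold low-confidence drafts for a human; release the rest."""
        if draft.confidence < self.threshold:
            self.pending.append(draft)
            return "human_review"
        return "send"

    def record_verdict(self, draft: DraftAnswer, accurate: bool) -> None:
        """Store a staff member's judgement as a labelled example for later training."""
        if draft in self.pending:
            self.pending.remove(draft)
        self.verdicts.append((draft, accurate))


queue = ReviewQueue()
unsure = DraftAnswer("Can I change my delivery address?", "Yes, in your account settings.", 0.55)
print(queue.route(unsure))  # human_review
queue.record_verdict(unsure, accurate=False)
```

Answers released automatically could still be spot-checked by staff; the threshold simply decides where human attention is spent first.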

2. Technical Robustness and Safety 🔧

The technical reliability of AI agents can be maintained by evaluating them against various performance metrics and retraining them as needed. This requires collecting data from every question and answer.

In the customer service case, the gathered data offered valuable insights into the AI's performance and allowed the AI agents to be fine-tuned for greater accuracy and reliability. Using the collected data together with feedback from testers and customer service professionals, the agents were retrained to better recognize and respond to customer needs.

Repeated tests and feedback also enable continuous quality control: issues are quickly identified and corrected, strengthening technical reliability and safety.
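The evaluate-and-retrain loop described above could be sketched as follows, assuming each logged interaction carries a correct/incorrect verdict from a tester or customer service professional. The `Interaction` type, the helper functions and the 90% accuracy target are illustrative assumptions; real projects typically track several metrics rather than a single one.

```python
from dataclasses import dataclass
from typing import List, Sequence


@dataclass
class Interaction:
    question: str
    answer: str
    rated_correct: bool  # verdict from a tester or customer service professional


def accuracy(log: Sequence[Interaction]) -> float:
    """Share of logged answers that reviewers marked as correct."""
    if not log:
        raise ValueError("no interactions logged yet")
    return sum(i.rated_correct for i in log) / len(log)


def needs_retraining(log: Sequence[Interaction], min_accuracy: float = 0.9) -> bool:
    """Flag the agent for retraining when accuracy falls below a chosen target."""
    return accuracy(log) < min_accuracy


def failed_cases(log: Sequence[Interaction]) -> List[Interaction]:
    """Wrongly answered questions: candidates for root-cause review and new training data."""
    return [i for i in log if not i.rated_correct]


log = [
    Interaction("q1", "a1", True),
    Interaction("q2", "a2", True),
    Interaction("q3", "a3", False),
    Interaction("q4", "a4", True),
]
print(accuracy(log))          # 0.75
print(needs_retraining(log))  # True
```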

3. Privacy and Data Governance 🔐

When sensitive customer service emails are used as training data, it's critically important to ensure that all personal information is appropriately protected and that data processing complies with data protection legislation such as the GDPR.

Additionally, users, such as customer service professionals, must be able to understand how AI uses and processes data. This promotes transparency and helps ensure that AI only uses appropriate and approved information.
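One common first step before emails are used for training is to mask obvious personal identifiers. The Python sketch below, assuming simple regular expressions for email addresses and phone numbers, is only an illustration: the patterns are hypothetical, will miss many kinds of personal data (names, street addresses, account numbers), may over-match, and masking alone does not make processing GDPR-compliant.

```python
import re

# Illustrative patterns only: they miss many identifiers and may over-match
# (the phone pattern also catches long digit runs such as order numbers or dates).
EMAIL_RE = re.compile(r"[\w.+-]+@[\w-]+(?:\.[\w-]+)+")
PHONE_RE = re.compile(r"\+?\d[\d\s-]{6,}\d")


def redact(text: str) -> str:
    """Replace email addresses and phone-like numbers with placeholder tokens."""
    text = EMAIL_RE.sub("[EMAIL]", text)
    return PHONE_RE.sub("[PHONE]", text)


print(redact("Reach me at jane.doe@example.com or +358 40 123 4567."))
# Reach me at [EMAIL] or [PHONE].
```

Placeholder tokens, rather than deletion, keep the sentence structure intact so the redacted text remains usable for training.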

4. Transparency and Explainability 📊

The logic and decision-making processes of AI should be opened up and explained to users. This can be done through visualizations, reports, discussions on the root causes of inaccurate responses, or explanations visible in the user interface.

The "explainability" of AI is important so users understand why AI arrives at certain decisions. For instance, if AI assesses a particular email message as important and worth responding to, it should be able to explain this decision, perhaps by referring to urgency-indicating vocabulary in the message, or the customer's previous communication history and purchasing behaviour.

This also helps to ensure that AI does not act discriminatorily or make decisions based on, for example, prejudices or distorted data.
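As a toy illustration of a decision that carries its own explanation, the sketch below scores an email using a hand-written urgency vocabulary plus the customer's contact history, and returns the reasons alongside the verdict. The word list, the history rule and the threshold are hypothetical. A rule-based scorer is used here only because its reasoning is trivially visible; explaining a machine-learning model usually requires dedicated techniques such as feature-attribution methods.

```python
from dataclasses import dataclass, field
from typing import List

# Hypothetical urgency vocabulary; a real system would curate or learn this.
URGENT_TERMS = {"urgent", "immediately", "asap", "deadline", "cancel", "complaint"}


@dataclass
class Assessment:
    important: bool
    reasons: List[str] = field(default_factory=list)


def assess_email(text: str, previous_contacts: int, threshold: int = 2) -> Assessment:
    """Score an email and return the evidence behind the score."""
    # Normalise words by stripping surrounding punctuation and lowercasing.
    words = {w.strip(".,!?:;\"'").lower() for w in text.split()}
    matched = sorted(words & URGENT_TERMS)
    reasons = [f"contains urgency term '{term}'" for term in matched]
    score = len(matched)
    # Hypothetical history rule: repeated earlier contacts add one point.
    if previous_contacts >= 3:
        score += 1
        reasons.append(f"customer has contacted support {previous_contacts} times before")
    return Assessment(important=score >= threshold, reasons=reasons)


result = assess_email("Please cancel my order immediately!", previous_contacts=0)
print(result.important)  # True
for reason in result.reasons:
    print("-", reason)
```

Because every point added to the score also adds a human-readable reason, a customer service professional can see exactly why a message was prioritised and challenge the rule if it looks wrong.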

5. Diversity, Non-Discrimination, and Fairness ⚖️

In customer service, AI must treat all customer messages impartially, regardless of the customer's background or the type of question.

This requires continuous evaluation and adaptation of AI solutions to ensure they do not favour or discriminate against any customer segment or question type. This is particularly important because AI systems can affect different demographic groups in different ways.
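Such continuous evaluation could start with something as simple as comparing answer accuracy across customer segments or question types. In this minimal sketch, each record is a `(segment, answered_correctly)` pair, and any segment trailing the best-performing one by more than an assumed 5 percentage points is flagged for investigation. The segment labels and the threshold are hypothetical, and accuracy parity is only one of several possible fairness measures.

```python
from collections import defaultdict
from typing import Dict, Iterable, Tuple


def accuracy_by_segment(records: Iterable[Tuple[str, bool]]) -> Dict[str, float]:
    """Share of correctly handled messages per segment (question type, region, ...)."""
    totals: Dict[str, int] = defaultdict(int)
    correct: Dict[str, int] = defaultdict(int)
    for segment, ok in records:
        totals[segment] += 1
        correct[segment] += int(ok)
    return {s: correct[s] / totals[s] for s in totals}


def flag_disparities(records: Iterable[Tuple[str, bool]], max_gap: float = 0.05) -> Dict[str, float]:
    """Return segments whose accuracy trails the best segment by more than max_gap."""
    rates = accuracy_by_segment(records)
    if not rates:
        return {}
    best = max(rates.values())
    return {s: r for s, r in rates.items() if best - r > max_gap}


records = ([("billing", True)] * 9 + [("billing", False)]
           + [("technical", True)] * 7 + [("technical", False)] * 3)
print(flag_disparities(records))  # {'technical': 0.7}
```

A flagged segment is a prompt for investigation, for example into whether that question type is under-represented in the training data, rather than proof of discrimination on its own.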

6. Environmental and Societal Well-being and Responsibility 🌍

While the customer service example does not directly address the environmental or societal impacts of AI, responsible use of AI implicitly includes considering these factors, according to Shepherd.

Artificial intelligence solutions should promote environmental sustainability and societal well-being, for instance, by minimizing resource wastage and increasing the availability of services and information to more sectors of society.

7. Accountability 🚩

Accountability is inherent in all practices mentioned above. The use of AI in customer service centres requires that solutions are responsibly designed and implemented.

This means there should be clear policies for data usage, transparency in AI decision-making, and ongoing monitoring and evaluation of the AI's impact and performance.

For example, for a customer service centre to be accountable for its use of AI, it should have a clear understanding of what the AI is doing, how it is doing it and what its limitations are. This understanding naturally develops when the team is involved in the project from the beginning: customizing, testing, and implementing the AI solution for a specific process.


Responsible AI Safeguards Companies and Increases Trust

The responsible deployment of AI is not merely a technical or financial concern; it's an ethical decision reflecting an organization's core values. A responsible company employs AI in a responsible manner.

Ethical considerations offer additional benefits. Shepherd's case shows that when the principles of responsible AI are integrated into AI development and use, they become both a safeguard and a competitive edge for the company.

"Principles of responsible AI protect the company and customers from potential risks and strengthen trust, building long-term value," says Shepherd.

About the Author: Saara Bergman is a marketing expert with experience in IT consulting, business process management, and integration platforms. Saara works as a Content Marketing Specialist at twoday.